Text Quest

Finding the Sweet Spot

2024 | UC San Diego Health

The Challenge

Does less text above the fold mean more clicks? Stakeholders believed that pages with over 66 characters of copy above the fold distracted users from seeing primary calls-to-action, reducing engagement. Before implementing this assumption across the website, we needed evidence. Could reducing hero text content actually increase CTA visibility and drive more conversions, or would users need that context to make informed decisions?

Business Objectives

Test the 66-character assumption to determine if copy volume above the fold impacts CTA performance

Establish evidence-based content guidelines for hero areas across high-traffic pages

Minimize risk on critical pages while gathering statistically significant data

Build organizational A/B testing capabilities using a new platform and refined processes

Create replicable methodology for future content and messaging experiments across the website

My Role & Responsibilities

As Co-Lead Researcher, I designed the A/B test methodology for a high-traffic page, developed success metrics tracking both primary CTAs and secondary page interactions, collaborated with stakeholders, documented testing processes and results for organizational learning, and presented findings that challenged initial assumptions and redirected future testing priorities.

I worked closely with the Associate Director, Digital Experience, to ensure this inaugural A/B test in our new platform would establish robust processes for future experimentation.

-

Study Type: A/B Testing

Test Platform: New A/B testing platform (inaugural organizational test)

Page Selection: High-traffic page to achieve statistical significance quickly

Test Variations:

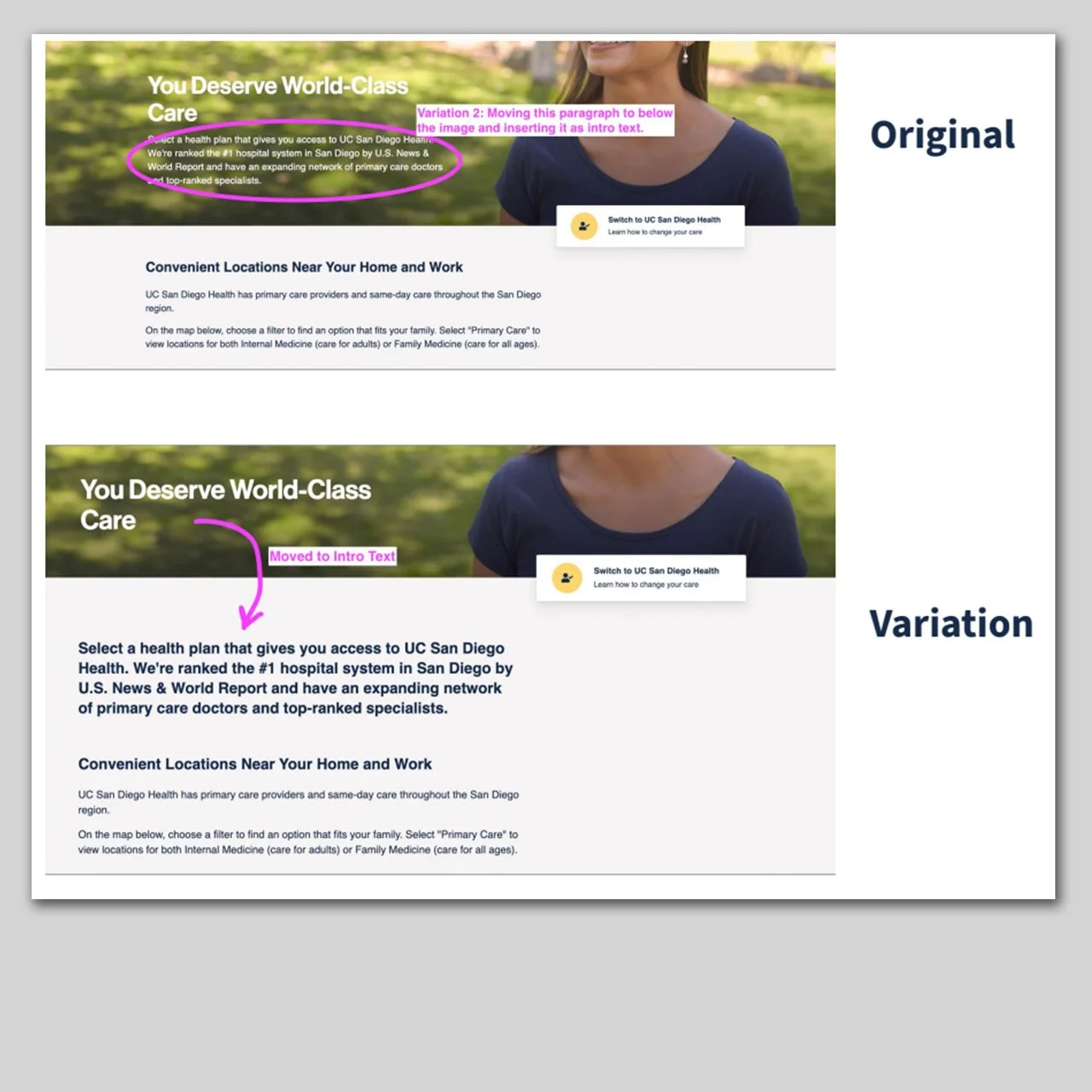

Control (Version A): Original page with full copy above the fold (over 66 characters)

Variation (Version B): Hero text moved below the fold, minimizing above-fold copy

Metrics Tracked:

Primary CTA click-through rate

Secondary link interactions across the page

User engagement patterns with both text placements

Time to statistical significance
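To make the click-through comparison concrete, here is a minimal sketch of a two-proportion z-test, one common way to check whether two CTA click-through rates differ by more than chance. The case study does not say which statistical method the testing platform used, so the function name, its parameters, and the counts in the usage note are hypothetical illustrations, not the study's data or the platform's calculation.

```python
import math


def two_proportion_z_test(clicks_a, visitors_a, clicks_b, visitors_b):
    """Return (z, two-sided p-value) for the CTR difference B minus A.

    Illustrative only: the inputs are hypothetical counts, and this is a
    textbook pooled z-test, not the testing platform's own method.
    """
    rate_a = clicks_a / visitors_a
    rate_b = clicks_b / visitors_b
    # Pooled click-through rate under the null hypothesis of no difference.
    pooled = (clicks_a + clicks_b) / (visitors_a + visitors_b)
    standard_error = math.sqrt(
        pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b)
    )
    z = (rate_b - rate_a) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, `two_proportion_z_test(120, 1000, 100, 1000)` compares a made-up control (120 clicks from 1,000 visitors) against a made-up variation (100 clicks from 1,000 visitors). A fixed-sample test like this assumes an even, unchanging split, so when traffic weights shift during a test, the platform's own adjusted statistics would be the more appropriate reference.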

Traffic Allocation Strategy:

Dynamic weighting designed to favor winning variation once detected

Minimized exposure to underperforming version to address stakeholder concerns

50/50 split maintained only until clear performance differences emerged
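The allocation strategy above can be sketched with Thompson sampling, a widely used technique for shifting traffic toward the better-performing variation as evidence accumulates. This is an assumption made for illustration: the case study does not name the algorithm behind the platform's dynamic weighting, and the function name, parameters, and counts below are hypothetical.

```python
import random


def allocation_weights(clicks, visitors, draws=10_000, seed=0):
    """Estimate the share of traffic each variation should receive.

    Illustrative Thompson-sampling sketch, not the platform's algorithm.
    Each variation's click-through rate gets a Beta(1 + clicks,
    1 + non-clicks) posterior; a variation's weight is the fraction of
    random draws in which its sampled rate is the highest.
    """
    rng = random.Random(seed)
    wins = [0] * len(clicks)
    for _ in range(draws):
        samples = [
            rng.betavariate(1 + c, 1 + (v - c))
            for c, v in zip(clicks, visitors)
        ]
        wins[samples.index(max(samples))] += 1
    return [w / draws for w in wins]
```

With no data yet, `allocation_weights([0, 0], [0, 0])` returns weights close to an even split. As one variation pulls clearly ahead, its weight approaches 1, which limits how many visitors see the weaker version.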

Initial Hypothesis: Moving copy below the fold would increase CTA visibility and drive higher click-through rates on primary actions.

-

The assumption was wrong. The original version with more copy above the fold slightly outperformed the variation with minimal hero text. However, the performance difference between versions was minimal, suggesting that hero text volume has limited impact on CTA performance within this context.

Users benefited from context: Rather than being distracted by text, users appeared to use above-fold copy to understand their options before engaging with CTAs. The presence of explanatory content did not suppress primary actions as predicted.

Secondary interactions remained stable: Moving text placement did not significantly affect other page interactions, indicating users navigate based on their goals rather than content positioning alone.

Statistical significance achieved efficiently: High traffic volume allowed us to reach conclusive results quickly, validating our page selection strategy and minimizing the time users experienced different variations.

-

Strategic Insights:

Disproved the 66-character assumption, preventing unnecessary content restrictions across the website

Demonstrated that contextual copy supports rather than hinders user decision-making

Redirected testing focus away from text volume toward higher-impact optimization opportunities

Organizational Capabilities:

Established robust A/B testing methodology in new platform

Created documentation processes for designing, running, and communicating test results

Built stakeholder confidence in experimentation by addressing concerns about user experience during testing

Developed replicable framework for future content and messaging experiments

Process Improvements:

Refined traffic allocation approaches balancing statistical rigor with stakeholder comfort

Established communication protocols for sharing results that challenge initial assumptions

Created templates for test documentation supporting organizational learning

What I Learned

Assumptions need testing, even when they seem logical: The 66-character guideline appeared reasonable—less visual clutter should mean better CTA visibility. Testing revealed reality is more nuanced. Users seeking healthcare information may actually need context before taking action, making "less is more" incorrect in this setting.

Negative results create positive value: Disproving an assumption prevented implementing changes that wouldn't improve performance. Learning what doesn't work is just as valuable as discovering what does, especially when it stops us from making costly mistakes at scale.

Process matters as much as outcomes: As our first test in the new platform, establishing reliable documentation, communication, and traffic management processes was as important as the findings themselves. These processes can now support dozens of future experiments.

Stakeholder concerns can drive better design: Concerns about exposing users to different experiences led us to implement adaptive traffic allocation. This constraint improved our methodology and demonstrated respect for user experience during experimentation—a principle that now guides all our testing.

Building testing culture takes time: This straightforward content test served a larger purpose: demonstrating that experimentation is safe, valuable, and leads to better decisions. By addressing concerns and communicating clearly, I built organizational confidence in evidence-based design.

Why This Project Matters

Digital teams face constant pressure to optimize based on best practices and industry assumptions. This test demonstrated the importance of validating those assumptions within your specific context before implementing changes at scale. By establishing rigorous testing processes and clearly communicating results—even when they challenge initial beliefs—I built a foundation for data-driven decision-making that continues benefiting the organization. Sometimes the most valuable research isn't discovering what works, but preventing implementation of what doesn't.

Research Methods: A/B testing • Quantitative analysis • Conversion tracking • Traffic allocation optimization • Stakeholder collaboration

Skills Applied: Experimental design • Hypothesis testing • Metrics definition • Statistical analysis • Process documentation • Change management • Evidence-based communication